Sunday, July 27, 2008

SQL Server Performance Checklist

Documented the SQL Server Performance Checklist in Excel Spreadsheet format .

Saturday, July 19, 2008

SQL Server 2005 SP2 installation failed on Active/Passive Cluster

SP2 fails to install database services - MSP Error: 29512 “Unable to install Windows Installer MSP file”

Errors from Summary.log

----------------------------------------------------------------------------------

Product : Database Services (SQL084)

Product Version (Previous): 2153

Product Version (Final) :

Status : Failure

Log File : C:\Program Files\Microsoft SQL Server\90\Setup Bootstrap\LOG\Hotfix\SQL9_Hotfix_KB921896_sqlrun_sql.msp.log

Error Number : 29512

Error Description : MSP Error: 29512 SQL Server Setup was unable add user pbs\csparq_service to local group AU\SGG-GM_ServiceAccounts.

----------------------------------------------------------------------------------

Configuration failed.

One or more products failed to install, see above for details

Exit Code Returned: 29512

Errors from SQL9_Hotfix_KB921896_sqlrun_sql.msp.log

MSI (s) (68:4C) [00:21:37:156]: Product: Microsoft SQL Server 2005 (64-bit) - Update 'Service Pack 2 for SQL Server Database Services 2005 (64-bit) ENU (KB921896)' could not be installed. Error code 1603.

Additional information is available in the log file C:\Program Files\Microsoft SQL Server\90\Setup Bootstrap\LOG\Hotfix\SQL9_Hotfix_KB921896_sqlrun_sql.msp.log.

Local group AU\SGG-GMRISK_ServiceAccounts doesn't exist

Failure adding user pbs\acoe_csparq_service to local group AU\GMRISK_ServiceAccounts (5)

Error Code: 0x80070005 (5)

Windows Error Text: Access is denied.

Source File Name: sqlca\sqlsecurityca.cpp

Compiler Timestamp: Sat Oct 7 09:43:41 2006

Function Name: Do_sqlGroupMember

Source Line Number: 1132

MSI (s) (68:4C) [00:21:37:156]: Note: 1: 1729

MSI (s) (68:4C) [00:21:37:156]: Transforming table Error.

MSI (s) (68:4C) [00:21:37:156]: Note: 1: 2262 2: Error 3: -2147287038

MSI (s) (68:4C) [00:21:37:188]: Transforming table Error.

MSI (s) (68:4C) [00:21:37:188]: Transforming table Error.

Workaround:

Errors from Summary.log

----------------------------------------------------------------------------------

Product : Database Services (SQL084)

Product Version (Previous): 2153

Product Version (Final) :

Status : Failure

Log File : C:\Program Files\Microsoft SQL Server\90\Setup Bootstrap\LOG\Hotfix\SQL9_Hotfix_KB921896_sqlrun_sql.msp.log

Error Number : 29512

Error Description : MSP Error: 29512 SQL Server Setup was unable add user pbs\csparq_service to local group AU\SGG-GM_ServiceAccounts.

----------------------------------------------------------------------------------

Configuration failed.

One or more products failed to install, see above for details

Exit Code Returned: 29512

Errors from SQL9_Hotfix_KB921896_sqlrun_sql.msp.log

MSI (s) (68:4C) [00:21:37:156]: Product: Microsoft SQL Server 2005 (64-bit) - Update 'Service Pack 2 for SQL Server Database Services 2005 (64-bit) ENU (KB921896)' could not be installed. Error code 1603.

Additional information is available in the log file C:\Program Files\Microsoft SQL Server\90\Setup Bootstrap\LOG\Hotfix\SQL9_Hotfix_KB921896_sqlrun_sql.msp.log.

Local group AU\SGG-GMRISK_ServiceAccounts doesn't exist

Failure adding user pbs\acoe_csparq_service to local group AU\GMRISK_ServiceAccounts (5)

Error Code: 0x80070005 (5)

Windows Error Text: Access is denied.

Source File Name: sqlca\sqlsecurityca.cpp

Compiler Timestamp: Sat Oct 7 09:43:41 2006

Function Name: Do_sqlGroupMember

Source Line Number: 1132

MSI (s) (68:4C) [00:21:37:156]: Note: 1: 1729

MSI (s) (68:4C) [00:21:37:156]: Transforming table Error.

MSI (s) (68:4C) [00:21:37:156]: Note: 1: 2262 2: Error 3: -2147287038

MSI (s) (68:4C) [00:21:37:188]: Transforming table Error.

MSI (s) (68:4C) [00:21:37:188]: Transforming table Error.

Workaround:

Quality

ETVX: Entry, Task, Verification, and Exit (software process developed by IBM)

Quality ensured by:

--------------------

Test, WP Review, Audit, PMPR

PMPR : Post Milestone Project Review

Configuration Managment:

-------------------------

Configurable item

Baseline : Frozen work product

Levels of Control

-------------------

Formal Control: Should go thru Change Management

WP delivered to client

Managed & Controlled:

Internal Project documentation

RASIC Chart

--------------

The designations in the approach are defined as follows:

* Responsible: those solely and directly accountable for creating a work product

* Approving: the party (or parties) that reviews and assures the work product's quality

* Supporting: individuals or groups who help to create the work product

* Informed: those who are to be kept informed about proceedings

* Consulted: those who help design the product or put in place quality review criteria

X axis - Roles

Y Axis - Activities

Goals

----------

Helps on defining goals

GQM

Goal - Question - Metrics

SMART

Specific

Measurable

Action Oriented / Aggressive yet attainable

Relevant to Clients expectation / Relevant

Time bound

CoQ - Cost of Quality

---------------------

The "cost of quality" isn't the price of creating a quality product or service. It's the cost of NOT creating a quality product or service.

Root cause analysis

--------------------

Cause and Effect / Fishbone / Ishikawa Diagram

5 Whys

5 W & 2 H

Primitive Metrics are basic data

------------------------------------

1. Size

Measure how big the application / Product is.

Using of

Function Point - FP

Lines of Code - LOC

Pages of Documentation - POD

2. Effort

is the mo.of labor hours expended to complete project and production support tasks.

helps on measuring the Productivity

helps determining Staff needed for a project

the effort needed depends on the size of the task, complexity, experience, and organization tools and processes.

Effort drives cost

Analysing of efforts involves Time Tracking

Project effort is not the same as billable effort - metric collection and billing have difference objectives

Time tracking ( SAP, PIV, MS Project) accounts for Project time, production support time and administrative time

3. Staff

is the no.of people assigned to a project for a given reporting period

Peak Staff:

The total count of individual who are allocated to and worked on a project during report period.

Peak staff must be a whole number

Count any person assigned to more than one project as one person for any and all of the projects which he or she is assigned.

FTE ( Full Time Equivalent)

a measure of the resources (people) who are allocated during a month.

4. Duration

Duration is the number of calendar days from the defined measurement ‘start date’ of the project to the project measurement ‘end date,’ less any hold time, defined in number of calendar days

5. Defects

A defect is a flaw in the product, system, or service that fails to perform its required functions

helps on assessing Quality & Reliablity

Pre-Release Defect

6. Change

Change is the measure of volatility of a system/application and of project requirements

7. Computer Resources

computing requirement for use by a project in the development of a system or to support the production environment.

Derived metrics form the Basic Primitive data

-----------------

1. Productivity

2. Delivery Rate

3. Defect Density

4 Estimate Accuracy

Defect & Rework

--------------------

Standard defect-type classification

------------------------------------

Requirement Error

Design Error

Coding Error

Testing Error

Third party Error

Documentation Error

H/W or Systems Software

Human Intervention Error

Project Management Error

Process/ Standard Error

Other

Severity of defects

-------------------

1 - Catastrophic

2 - Major ( without workaround)

3 - Major ( with workaround)

4 - Minor

5 - Cosmetic

6 - Internal

Service Performance Indicators (SPI)

Information Engineering Metrics Standards and Guidelines (S&G)

Production Support

-------------------

Safe & Escape

Differnce between Verification & Validation

Verification:

--------------

Verification ensures that you are building a product according to its requirements, specifications, and standards. For Verification, you should ask the following questions:

Are you meeting the specified requirements?

Are you building the product right?

Verification is CORRECTNESS.

Example:

performed by the developers on not on intended environment

Validation:

-------------

Validation ensures that your product will be usable once it is in its intended environment. For Validation, you should ask the following questions:

Are you meeting the operational need?

Does this product meet its intended use in the intended environment?

Are you building the right product?

performed by the end users on intended environment

Validation is TRUTH.

Example: UAT

TRUTH is something absolute which cannot be interpretted differently.

Correctness is relative to the system as presented.

When prototypes are developed to ensure that specific requirements can be addressed, it is an example of a verification practice. When end users are asked to evaluate prototypes to ensure that the product will meet their needs, it is an example of a validation practice.

Earned Value Management

Basic on Earned Value Management

CMMi Level

Objective of High Maturity Model

Control: Understand and control process varaince

Predict: Predict Performance from process capability

Improve: Constantly improve process capability

Saturday, July 12, 2008

SQL Server Performance baseline

1 Find Objects using Cursors

-------------------------------------

SELECT object_name(id) FROM syscomments

WHERE text LIKE '%DECLARE%CURSOR%'

2. Find views using Views

-------------------------------------------

Select * from sysComments where text like '%from v%' and type = 'V'

3. TempDB Usage History at instance level

----------------------------------------------

SELECT getdate(),

SUM(user_object_reserved_page_count) * 8 as user_objects_kb,

SUM(internal_object_reserved_page_count) * 8 as internal_objects_kb,

SUM(version_store_reserved_page_count) * 8 as version_store_kb,

SUM(unallocated_extent_page_count) * 8 as freespace_kb

FROM sys.dm_db_file_Space_Usage

where database_id = 2

4. TempDB Usage History at object level

--------------------------------------------

SELECT

obj.objectid as [Object_Id],

getdate() as SampleDateTime,

sum(tmp.user_objects_alloc_page_count) AS user_objects_alloc_page_count,

sum(tmp.user_objects_dealloc_page_count) AS user_objects_dealloc_page_count,

sum(tmp.internal_objects_alloc_page_count) AS internal_objects_alloc_page_count,

sum(tmp.internal_objects_dealloc_page_count) AS internal_objects_dealloc_page_count

FROM sys.dm_db_task_space_usage AS tmp

LEFT OUTER JOIN sys.dm_exec_requests AS req

ON tmp.session_id = req.session_id

OUTER APPLY sys.dm_exec_sql_text(req.sql_handle) AS obj

WHERE tmp.session_id > 50

AND obj.objectid is not null

Group BY obj.ObjectId

5. Object Block history

-----------------------------------

SELECT s.object_id

, SampleDateTime = getdate()

, indexname=i.name, i.index_id

, [block_pct]=cast (100.0 * row_lock_wait_count / (1 + row_lock_count) as numeric(15,2))

, row_lock_wait_ms = row_lock_wait_in_ms

, [avg_row_lock_waits_ms]=cast (1.0 * row_lock_wait_in_ms / (1 + row_lock_wait_count) as numeric(15,2))

from sys.dm_db_index_operational_stats (db_id(), NULL, NULL, NULL) s

,sys.indexes i

where objectproperty(s.object_id,'IsUserTable') = 1

and i.object_id = s.object_id

and i.index_id = s.index_id

And row_lock_wait_count <> 0

6. CPU Usage History

----------------------------

SELECT

objectid as [Object_Id]

, getdate() As SampleDateTime

, total_cpu_time

, total_execution_count

, number_of_statements

FROM (

SELECT

qs.sql_handle

, sum(qs.total_worker_time) as total_cpu_time

, sum(qs.execution_count) as total_execution_count

, count(*) as number_of_statements

FROM

sys.dm_exec_query_stats qs

GROUP BY qs.sql_handle ) dt

Cross Apply sys.dm_exec_sql_text(dt.sql_handle) eqp

WHERE ObjectId is not null

7. I/O Usage History

-----------------------------------

Select ObjectId As [Object_ID], GetDate() as SampleDateTime

, (total_logical_reads/Cast(execution_count as Decimal(38,16))) as avg_logical_reads

, (total_logical_writes/Cast(execution_count as Decimal(38,16))) as avg_logical_writes

, (total_physical_reads/Cast(execution_count as Decimal(38,16))) as avg_physical_reads

, Execution_Count

from (

select

Sum(total_logical_reads) as total_logical_reads

, Sum(total_logical_writes) as total_logical_writes

, Sum(total_physical_reads) as total_physical_reads

, Sum(execution_count) as execution_count

, sh.objectid

from sys.dm_exec_query_stats

Cross Apply sys.dm_exec_sql_text(sql_Handle) sh

Where objectId is not null

Group By objectid

) SubQry

8. Table Data Growth volume History

------------------------------------------

DECLARE @START_DATE datetime,

@END_DATE datetime

SET @START_DATE = getdate()

SET @END_DATE = getdate()

IF @START_DATE = @END_DATE

SET @END_DATE = Convert(varchar(10), DateAdd(day, 1, @END_DATE), 101)

SELECT startdt.object_id, object_name(startdt.object_id) as [Object_Name], startdt.[RowCount] as Starting_Row_Count,

enddt.[RowCount] as Ending_Row_Count,

pct_Growth = case when startdt.[rowcount] = 0 then 0

else (enddt.[rowCount] - startdt.[rowCount])/Convert(Decimal(38, 16), startdt.[rowcount])

end

FROM Benchmark_Table_DataVolumeHistory startdt

INNER JOIN (

Select object_id, min(SampleDateTime) as minSampleDateTime,

max(SampleDateTime) As maxSampleDateTime

FROM Benchmark_Table_DataVolumeHistory

WHERE SampleDateTime >= Convert(varchar(10), Getdate(), 101)

And SampleDateTime < @END_DATE

GROUP BY object_Id

) sub

ON startdt.object_id = sub.object_id

and startdt.SampleDateTime = minSampleDateTime

INNER JOIN BenchMark_Table_DataVolumeHistory enddt

ON enddt.object_id = sub.object_id

and enddt.SampleDateTime = maxSampleDateTime

SELECT UseCaseDesc, startdt.object_id, object_name(startdt.object_id) as [Object_Name], startdt.[RowCount] as Starting_Row_Count,

enddt.[RowCount] as Ending_Row_Count,

pct_Growth = case when startdt.[rowcount] = 0 then 0

else (enddt.[rowCount] - startdt.[rowCount])/Convert(Decimal(38, 16), startdt.[rowcount])

end

FROM Benchmark_Table_DataVolumeHistory startdt

INNER JOIN (

Select uc.UseCaseDesc, hist.object_id, min(SampleDateTime) as minSampleDateTime,

max(SampleDateTime) As maxSampleDateTime

FROM Benchmark_Table_DataVolumeHistory hist

INNER JOIN Benchmark_useCase_Object_assn ua

ON hist.object_id = ua.object_id

inner join benchmark_usecase uc

on ua.usecaseid = uc.usecaseid

WHERE SampleDateTime >= Convert(varchar(10), Getdate(), 101)

And SampleDateTime < @END_DATE

GROUP BY hist.object_Id, usecasedesc

) sub

ON startdt.object_id = sub.object_id

and startdt.SampleDateTime = minSampleDateTime

INNER JOIN BenchMark_Table_DataVolumeHistory enddt

ON enddt.object_id = sub.object_id

and enddt.SampleDateTime = maxSampleDateTime

-------------------------------------

SELECT object_name(id) FROM syscomments

WHERE text LIKE '%DECLARE%CURSOR%'

2. Find views using Views

-------------------------------------------

Select * from sysComments where text like '%from v%' and type = 'V'

3. TempDB Usage History at instance level

----------------------------------------------

SELECT getdate(),

SUM(user_object_reserved_page_count) * 8 as user_objects_kb,

SUM(internal_object_reserved_page_count) * 8 as internal_objects_kb,

SUM(version_store_reserved_page_count) * 8 as version_store_kb,

SUM(unallocated_extent_page_count) * 8 as freespace_kb

FROM sys.dm_db_file_Space_Usage

where database_id = 2

4. TempDB Usage History at object level

--------------------------------------------

SELECT

obj.objectid as [Object_Id],

getdate() as SampleDateTime,

sum(tmp.user_objects_alloc_page_count) AS user_objects_alloc_page_count,

sum(tmp.user_objects_dealloc_page_count) AS user_objects_dealloc_page_count,

sum(tmp.internal_objects_alloc_page_count) AS internal_objects_alloc_page_count,

sum(tmp.internal_objects_dealloc_page_count) AS internal_objects_dealloc_page_count

FROM sys.dm_db_task_space_usage AS tmp

LEFT OUTER JOIN sys.dm_exec_requests AS req

ON tmp.session_id = req.session_id

OUTER APPLY sys.dm_exec_sql_text(req.sql_handle) AS obj

WHERE tmp.session_id > 50

AND obj.objectid is not null

Group BY obj.ObjectId

5. Object Block history

-----------------------------------

SELECT s.object_id

, SampleDateTime = getdate()

, indexname=i.name, i.index_id

, [block_pct]=cast (100.0 * row_lock_wait_count / (1 + row_lock_count) as numeric(15,2))

, row_lock_wait_ms = row_lock_wait_in_ms

, [avg_row_lock_waits_ms]=cast (1.0 * row_lock_wait_in_ms / (1 + row_lock_wait_count) as numeric(15,2))

from sys.dm_db_index_operational_stats (db_id(), NULL, NULL, NULL) s

,sys.indexes i

where objectproperty(s.object_id,'IsUserTable') = 1

and i.object_id = s.object_id

and i.index_id = s.index_id

And row_lock_wait_count <> 0

6. CPU Usage History

----------------------------

SELECT

objectid as [Object_Id]

, getdate() As SampleDateTime

, total_cpu_time

, total_execution_count

, number_of_statements

FROM (

SELECT

qs.sql_handle

, sum(qs.total_worker_time) as total_cpu_time

, sum(qs.execution_count) as total_execution_count

, count(*) as number_of_statements

FROM

sys.dm_exec_query_stats qs

GROUP BY qs.sql_handle ) dt

Cross Apply sys.dm_exec_sql_text(dt.sql_handle) eqp

WHERE ObjectId is not null

7. I/O Usage History

-----------------------------------

Select ObjectId As [Object_ID], GetDate() as SampleDateTime

, (total_logical_reads/Cast(execution_count as Decimal(38,16))) as avg_logical_reads

, (total_logical_writes/Cast(execution_count as Decimal(38,16))) as avg_logical_writes

, (total_physical_reads/Cast(execution_count as Decimal(38,16))) as avg_physical_reads

, Execution_Count

from (

select

Sum(total_logical_reads) as total_logical_reads

, Sum(total_logical_writes) as total_logical_writes

, Sum(total_physical_reads) as total_physical_reads

, Sum(execution_count) as execution_count

, sh.objectid

from sys.dm_exec_query_stats

Cross Apply sys.dm_exec_sql_text(sql_Handle) sh

Where objectId is not null

Group By objectid

) SubQry

8. Table Data Growth volume History

------------------------------------------

DECLARE @START_DATE datetime,

@END_DATE datetime

SET @START_DATE = getdate()

SET @END_DATE = getdate()

IF @START_DATE = @END_DATE

SET @END_DATE = Convert(varchar(10), DateAdd(day, 1, @END_DATE), 101)

SELECT startdt.object_id, object_name(startdt.object_id) as [Object_Name], startdt.[RowCount] as Starting_Row_Count,

enddt.[RowCount] as Ending_Row_Count,

pct_Growth = case when startdt.[rowcount] = 0 then 0

else (enddt.[rowCount] - startdt.[rowCount])/Convert(Decimal(38, 16), startdt.[rowcount])

end

FROM Benchmark_Table_DataVolumeHistory startdt

INNER JOIN (

Select object_id, min(SampleDateTime) as minSampleDateTime,

max(SampleDateTime) As maxSampleDateTime

FROM Benchmark_Table_DataVolumeHistory

WHERE SampleDateTime >= Convert(varchar(10), Getdate(), 101)

And SampleDateTime < @END_DATE

GROUP BY object_Id

) sub

ON startdt.object_id = sub.object_id

and startdt.SampleDateTime = minSampleDateTime

INNER JOIN BenchMark_Table_DataVolumeHistory enddt

ON enddt.object_id = sub.object_id

and enddt.SampleDateTime = maxSampleDateTime

SELECT UseCaseDesc, startdt.object_id, object_name(startdt.object_id) as [Object_Name], startdt.[RowCount] as Starting_Row_Count,

enddt.[RowCount] as Ending_Row_Count,

pct_Growth = case when startdt.[rowcount] = 0 then 0

else (enddt.[rowCount] - startdt.[rowCount])/Convert(Decimal(38, 16), startdt.[rowcount])

end

FROM Benchmark_Table_DataVolumeHistory startdt

INNER JOIN (

Select uc.UseCaseDesc, hist.object_id, min(SampleDateTime) as minSampleDateTime,

max(SampleDateTime) As maxSampleDateTime

FROM Benchmark_Table_DataVolumeHistory hist

INNER JOIN Benchmark_useCase_Object_assn ua

ON hist.object_id = ua.object_id

inner join benchmark_usecase uc

on ua.usecaseid = uc.usecaseid

WHERE SampleDateTime >= Convert(varchar(10), Getdate(), 101)

And SampleDateTime < @END_DATE

GROUP BY hist.object_Id, usecasedesc

) sub

ON startdt.object_id = sub.object_id

and startdt.SampleDateTime = minSampleDateTime

INNER JOIN BenchMark_Table_DataVolumeHistory enddt

ON enddt.object_id = sub.object_id

and enddt.SampleDateTime = maxSampleDateTime

Friday, July 11, 2008

MOC Client Conversation Logger

MOC Client Conversation Logger can be downloaded from

http://www.kellerinc.net/Downloads/tabid/56/Default.aspx

http://www.kellerinc.net/Downloads/tabid/56/Default.aspx

Tuesday, July 08, 2008

Hyber Threading - HT

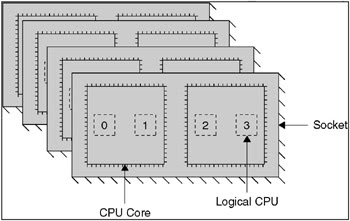

A thread is a unit of execution that runs on a CPU that can only execute one thread at a time. However, a CPU is very good at switching between threads very quickly to share its power, giving the illusion of simultaneous execution.

Hyper-Threading is a technology found only on Intel processors, that tries to make more efficient use of the CPU by duplicating the architectural state to provide two logical CPUs.

This allows more than 1 thread to be scheduled and enables simultaneous execution when the threads require different processor functions. The effect of this is that you will see two logical CPUs to which the operating system can assign different threads. However, it is only the architectural state that is duplicated, not the physical execution engine. Figure shows a 4 dual-core system with Hyper-Threading enabled, giving 16 logical processors.

Hyper-Threading is only ever going to give you a maximum of 1.3 times nonHyper-Threaded performance and in practice it will be closer to 1.1 to 1.15 times. This knowledge helps put any decision about enabling it back into perspective.

Disable Hyper-Threading unless you have the time to test it thoroughly. Don’t use it on Windows 2000 as it’s not Hyper-Threading aware.

SQL Server license Sacket level not at core CPU

Multi-core effectively means more than one CPU on the same chip so when you buy a dual-core processor you’re actually buying two CPU cores, Windows will see two CPUs, and you should treat it for capacity planning purposes like two single-core CPUs.

It gets even better from a licensing perspective because Microsoft per-processor licensing is per socket, not per core. Socket refers to the physical socket that you plug the processor into.

This licensing model gives Microsoft a competitive advantage over its competitors in the database market, where others charge customers a license fee per core. This is great news for SQL Server customers because a server with four dual-core CPUs will perform comparably with an eight single-core server but at half the license cost.

Image from book

Multi-core processors are only licensed per socket so if you can get an 8-core processor you’ll only need a Windows and SQL Server license for 1 socket!

Monday, July 07, 2008

Setting Unrestricted growth

Problem:

On changing the log file growth to unrestricted. Using the management studio UI, simply clicking on the properties of the DB and then files and then change the option to unrestricted growth on the log file and hitting ok and check the properties, it flips back to unrestricted

Database file was created with the maxsize defined, we altered the database and change the maxsize to unlimited. But afterwards it reverted to the previous max size.

Resolution:

It is by design as advised on

ON BOL:

UNLIMITED

Specifies that the file grows until the disk is full. In SQL Server 2005, a log file specified with unlimited growth has a maximum size of 2 TB, and a data file has a maximum size of 16 TB.

Workaround:

To get this 'unrestricted' radio button enabled for the log file growth again is to;

- backup db

- detach db

- rename log file to something xx_old.ldf

- attach db again using only the MDF file again and letting SQL create a new log file for you

- delete xx_old.ldf file if all successful

On changing the log file growth to unrestricted. Using the management studio UI, simply clicking on the properties of the DB and then files and then change the option to unrestricted growth on the log file and hitting ok and check the properties, it flips back to unrestricted

Database file was created with the maxsize defined, we altered the database and change the maxsize to unlimited. But afterwards it reverted to the previous max size.

Resolution:

It is by design as advised on

ON BOL:

UNLIMITED

Specifies that the file grows until the disk is full. In SQL Server 2005, a log file specified with unlimited growth has a maximum size of 2 TB, and a data file has a maximum size of 16 TB.

Workaround:

To get this 'unrestricted' radio button enabled for the log file growth again is to;

- backup db

- detach db

- rename log file to something xx_old.ldf

- attach db again using only the MDF file again and letting SQL create a new log file for you

- delete xx_old.ldf file if all successful

Sunday, July 06, 2008

Free SQL Server tools that might make your life a little easier

check out on below link for free SQL Server tools that might make your life a little easier

http://weblogs.sqlteam.com/mladenp/archive/2007/11/20/free-sql-server-tools-that-might-make-your-life-a.aspx

http://weblogs.sqlteam.com/mladenp/archive/2007/11/20/free-sql-server-tools-that-might-make-your-life-a.aspx

Wednesday, July 02, 2008

Verify the TCP Port

Verify the server is listening on the correct port (1433 in my case). Do this by running the following command in a command prompt window:

netstat -ano | findstr 1433

netstat -ano | findstr 1433

Terminal Citrix Server Connection issue

1. On Run type “regedit”

2. Go to Registry entry “HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\MSLicensing\Store”

3. Delete the LICENSE001

4. Try connecting on Citrix Terminal Server

5. Let me know if it works.

2. Go to Registry entry “HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\MSLicensing\Store”

3. Delete the LICENSE001

4. Try connecting on Citrix Terminal Server

5. Let me know if it works.

Tuesday, July 01, 2008

Missing performance counters X64 OS with SQL2000 and SQL 2005

If you’re using x64 Windows and x64 SQL Server you shouldn’t have any issues. System Monitor will by default have the correct counters loaded and available for you to view and the same applies to IA64 Windows and IA64 SQL Server deployments.

However, if you’re using SQL Server in a Windows-On-Windows (WOW) mode on x64 (which is to say that x64 Windows is emulating a 32-bit environment to facilitate you running an x86 instance of SQL Server), When you start System Monitor on the x64 host, you’ll find that none of the counters relating to the 32-bit SQL Server instance are available. This is be cause on an x64 host you’ll be running an x64 version of System Monitor by default.

In the logfile I overlooked Event ID 1021:

Windows cannot open the 32-bit extensible counter DLL MSSQLSERVER in a 64-bit environment. Contact the file vendor to obtain a 64-bit version. Alternatively, you can open the 32-bit extensible counter DLL by using the 32-bit version of Performance Monitor. To use this tool, open the Windows folder, open the Syswow64 folder, and then start Perfmon.exe.

Resolution:

Start C:\WINDOWS\SysWOW64\Perfmon.exe, this is the 32-bit version, and all the counters are there.

Monitoring the 32-bit SQL Server instance in the scenario will require running a 32-bit instance of System Monitor, and this can be achieved by running the following

mmc /32perfmon.msc

Once the 32-bit MMC console has started with the Perfmon snap-in, the 32-bit SQL Server counters should be available for addition to your log.

However, if you’re using SQL Server in a Windows-On-Windows (WOW) mode on x64 (which is to say that x64 Windows is emulating a 32-bit environment to facilitate you running an x86 instance of SQL Server), When you start System Monitor on the x64 host, you’ll find that none of the counters relating to the 32-bit SQL Server instance are available. This is be cause on an x64 host you’ll be running an x64 version of System Monitor by default.

In the logfile I overlooked Event ID 1021:

Windows cannot open the 32-bit extensible counter DLL MSSQLSERVER in a 64-bit environment. Contact the file vendor to obtain a 64-bit version. Alternatively, you can open the 32-bit extensible counter DLL by using the 32-bit version of Performance Monitor. To use this tool, open the Windows folder, open the Syswow64 folder, and then start Perfmon.exe.

Resolution:

Start C:\WINDOWS\SysWOW64\Perfmon.exe, this is the 32-bit version, and all the counters are there.

Monitoring the 32-bit SQL Server instance in the scenario will require running a 32-bit instance of System Monitor, and this can be achieved by running the following

mmc /32perfmon.msc

Once the 32-bit MMC console has started with the Perfmon snap-in, the 32-bit SQL Server counters should be available for addition to your log.

DMV & DMF

There are two types of dynamic management views and functions:

* Server-scoped dynamic management views and functions. These require VIEW SERVER STATE permission on the server.

* Database-scoped dynamic management views and functions. These require VIEW DATABASE STATE permission on the database.

To query a dynamic management view or function requires SELECT permission on object and VIEW SERVER STATE or VIEW DATABASE STATE permission

Dynamic management views and functions have been organized into the following categories.

1. Common Language Runtime Related Dynamic Management Views

2. I/O Related Dynamic Management Views and Functions

3. Database Mirroring Related Dynamic Management Views

4. Query Notifications Related Dynamic Management Views

5. Database Related Dynamic Management Views

6. Replication Related Dynamic Management Views

7. Execution Related Dynamic Management Views and Functions

8. Service Broker Related Dynamic Management Views

9. Full-Text Search Related Dynamic Management Views

10. SQL Server Operating System Related Dynamic Management Views

11. Index Related Dynamic Management Views and Functions

12. Transaction Related Dynamic Management Views and Functions

Excerpt from http://msdn.microsoft.com/en-us/library/ms188754.aspx

DMV Scripts are on Scripts Repository: http://www.microsoft.com/technet/scriptcenter/scripts/sql/sql2005/default.mspx?mfr=true

* Server-scoped dynamic management views and functions. These require VIEW SERVER STATE permission on the server.

* Database-scoped dynamic management views and functions. These require VIEW DATABASE STATE permission on the database.

To query a dynamic management view or function requires SELECT permission on object and VIEW SERVER STATE or VIEW DATABASE STATE permission

Dynamic management views and functions have been organized into the following categories.

1. Common Language Runtime Related Dynamic Management Views

2. I/O Related Dynamic Management Views and Functions

3. Database Mirroring Related Dynamic Management Views

4. Query Notifications Related Dynamic Management Views

5. Database Related Dynamic Management Views

6. Replication Related Dynamic Management Views

7. Execution Related Dynamic Management Views and Functions

8. Service Broker Related Dynamic Management Views

9. Full-Text Search Related Dynamic Management Views

10. SQL Server Operating System Related Dynamic Management Views

11. Index Related Dynamic Management Views and Functions

12. Transaction Related Dynamic Management Views and Functions

Excerpt from http://msdn.microsoft.com/en-us/library/ms188754.aspx

DMV Scripts are on Scripts Repository: http://www.microsoft.com/technet/scriptcenter/scripts/sql/sql2005/default.mspx?mfr=true

Subscribe to:

Posts (Atom)